Circle fitting is a common task that often appears in machine vision applications. Solving a problem in the least squares sense comes up just as often. As it turns out, the former nicely illustrates the pitfalls of the latter. Today I would like to discuss what it means to fit a circle in the least squares sense and whether this leads to an unambiguous solution. I will base my example on two rather old but very informative papers:

- Gander W., Golub G.H., Strebel R., Least-Squares Fitting of Circles and Ellipses, BIT Numerical Mathematics, 34(4), pp. 558–578, 1994

- Coope I.D., Circle fitting by linear and nonlinear least squares, Journal of Optimization Theory and Applications, 76(2), pp. 381–388, 1993

First, let's define the problem at hand. The task is to find the radius and center of the circle that best fits a set of points on the plane. The points do not necessarily lie exactly on the circumference of a circle, may not be evenly distributed, and their number may vary; however, it is always greater than the number of sought parameters.

The algebraic approach

The most straightforward approach is to consider an algebraic representation of a circle on a plane:

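\[ F(\boldsymbol{x}) = a\,\boldsymbol{x}^T\boldsymbol{x} + \boldsymbol{b}^T\boldsymbol{x} + c = 0 \]

where:

\[ a \neq 0, \qquad \boldsymbol{x} = (x, y)^T, \qquad \boldsymbol{b} = (b_1, b_2)^T \]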

Having $m$ data points we can build an overdetermined set of linear equations

\[ B\boldsymbol{u} = \boldsymbol{0} \]

where $\boldsymbol{u} = (a, b_1, b_2, c)^T$ is the vector of circle coefficients and

\[ B = \left[\begin{array}{cccc} x_{1}^2 + y_{1}^2 & x_{1} & y_{1} & 1 \\ \vdots & \vdots & \vdots & \vdots \\ x_{m}^2 + y_{m}^2 & x_{m} & y_{m} & 1 \end{array}\right] \]

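If the number of points $m > 3$ and the points do not lie exactly on a circle, the system generally has only the trivial solution $\boldsymbol{u} = \boldsymbol{0}$. We therefore look for the coefficient vector $\boldsymbol{u}$ that minimizes the norm of the residual $\|B\boldsymbol{u}\|$ subject to the constraint $\|\boldsymbol{u}\| = 1$, which excludes the trivial solution.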

According to the first referenced paper, the solution is the right singular vector of the matrix $B$ associated with its smallest singular value.
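Why the smallest singular value appears can be seen from a short, standard argument about minimizing $\|B\boldsymbol{u}\|$ over unit vectors $\boldsymbol{u}$. The sketch below assumes the singular value decomposition $B = U \Sigma V^T$ with singular values $\sigma_1 \ge \dots \ge \sigma_4 \ge 0$ and right singular vectors $\boldsymbol{v}_j$ (the columns of $V$); this notation is introduced here for illustration only:

```latex
\begin{align*}
\|B\boldsymbol{u}\|^2
  &= \boldsymbol{u}^T V \Sigma^T \Sigma V^T \boldsymbol{u}
   = \sum_{j=1}^{4} \sigma_j^2 \left(\boldsymbol{v}_j^T \boldsymbol{u}\right)^2 \\
  &\ge \sigma_4^2 \sum_{j=1}^{4} \left(\boldsymbol{v}_j^T \boldsymbol{u}\right)^2
   = \sigma_4^2 \,\|\boldsymbol{u}\|^2 = \sigma_4^2
  \qquad \text{for } \|\boldsymbol{u}\| = 1
\end{align*}
```

Equality holds for $\boldsymbol{u} = \boldsymbol{v}_4$, so a unit vector minimizing $\|B\boldsymbol{u}\|$ is the right singular vector belonging to the smallest singular value.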

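Now the circle parameters are easily obtained from the components $(a, b_1, b_2, c)$ of this vector:

\[ x_c = -\frac{b_1}{2a}, \qquad y_c = -\frac{b_2}{2a}, \qquad r = \sqrt{\frac{b_1^2 + b_2^2}{4a^2} - \frac{c}{a}} \]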
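The center and radius follow from completing the square in the algebraic circle equation. A short derivation, assuming the coefficients are named $a$, $b_1$, $b_2$, $c$ in the order of the columns of $B$ and that $a \neq 0$:

```latex
\begin{align*}
a\,(x^2 + y^2) + b_1 x + b_2 y + c &= 0 \\
x^2 + \frac{b_1}{a}\,x + y^2 + \frac{b_2}{a}\,y &= -\frac{c}{a} \\
\left(x + \frac{b_1}{2a}\right)^2 + \left(y + \frac{b_2}{2a}\right)^2
  &= \frac{b_1^2 + b_2^2}{4a^2} - \frac{c}{a}
\end{align*}
```

Comparing the last line with $(x - x_c)^2 + (y - y_c)^2 = r^2$ gives $x_c = -b_1/(2a)$, $y_c = -b_2/(2a)$ and $r^2 = (b_1^2 + b_2^2)/(4a^2) - c/a$, which are the expressions used in the Matlab script.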

To see how this approach works in practice we can easily code it in Matlab. For this test, as well as for upcoming ones, we are going to use a small data set as defined in the referenced paper.

The data set consists of six points: (1, 7), (2, 6), (5, 8), (7, 7), (9, 5) and (3, 7).

P = [1 7; 2 6; 5 8; 7 7; 9 5; 3 7];

B = [(P.*P)*[1 1]‘, P(:,1), P(:,2), ones(6, 1)];

[U,S,V] = svd(B);

d = diag(S);

res = V(:, find(d == min(d)));

xc = –res(2)/(2*res(1));

yc = –res(3)/(2*res(1));

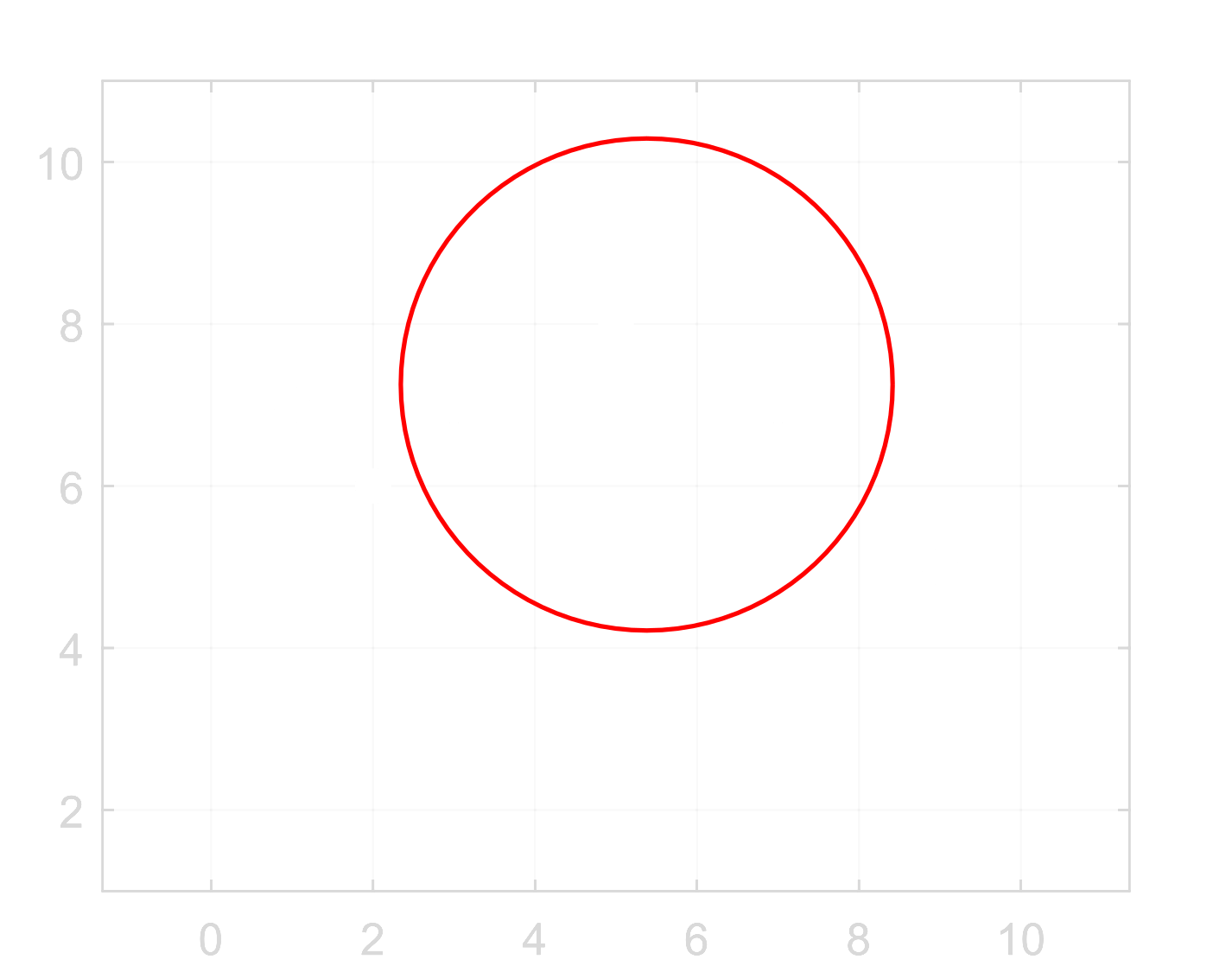

r = sqrt((res(2)^2 + res(3)^2)/(4*res(1)^2) – res(4)/res(1));The script builds the

![]()

Now the question arises whether this is the desired outcome, and why we have got a result other than expected. It turns out that the algebraic approach minimizes the residual of the circle equation evaluated at the data points, not the distance of the points from the circle, so it does not take the geometry of the problem into account. Therefore the result is likely to be unsatisfactory, especially if the number of sampled points is small or they are not evenly distributed. Is there a more reasonable way to fit a circle?
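It is worth checking that the unexpected result is a property of the data rather than a flaw in the recipe. The sketch below is a minimal check, assuming hypothetical points `Q` placed exactly on a circle with center (2, -1) and radius 3 (these values and the names `Q`, `Bq`, `u` are made up for illustration); for such data the same SVD construction should recover the circle up to rounding:

```matlab
% Hypothetical test data: 8 points exactly on a circle, center (2, -1), radius 3.
t = linspace(0, 2*pi, 9)'; t(end) = [];
Q = [2 + 3*cos(t), -1 + 3*sin(t)];

% Same construction as in the script above: columns x^2 + y^2, x, y, 1.
Bq = [sum(Q.^2, 2), Q, ones(size(Q, 1), 1)];
[~, Sq, Vq] = svd(Bq);
u = Vq(:, end);              % singular values are sorted, so the last column
                             % belongs to the smallest one

xc_q = -u(2) / (2*u(1));
yc_q = -u(3) / (2*u(1));
r_q  = sqrt((u(2)^2 + u(3)^2) / (4*u(1)^2) - u(4)/u(1));
sigma_min = Sq(4, 4);        % numerically zero for exact data
```

For exact data the smallest singular value vanishes and both the algebraic and the geometric notion of fit give the same circle; they only part ways once the points deviate from a circle.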

The geometric approach

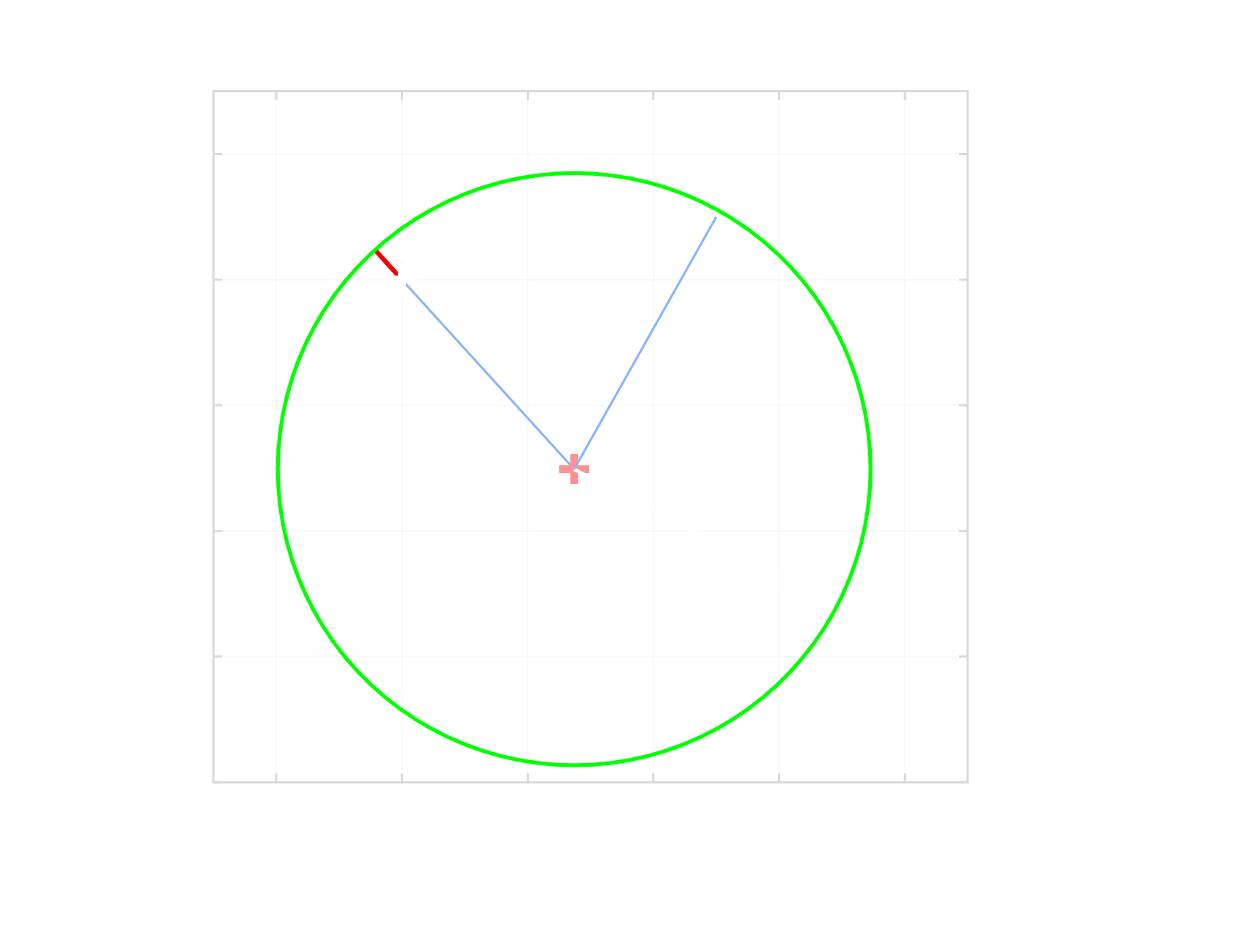

The problem with the algebraic approach was that we did not really know what we were minimizing. Now we will define the cost function so that it minimizes the sum of squared differences between the radius and the length of the segment joining the center with each sampled point, as indicated in the picture.

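The residual for a data point $\boldsymbol{x}_i = (x_i, y_i)^T$, given the center $\boldsymbol{c} = (x_c, y_c)^T$ and the radius $r$, is defined as:

\[ d_i = \|\boldsymbol{c} - \boldsymbol{x}_i\| - r \]

or after expansion:

\[ d_i = \sqrt{(x_c - x_i)^2 + (y_c - y_i)^2} - r \]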

Thus, the cost function to minimize is the sum of squared residuals over all data points:

\[ S(x_c, y_c, r) = \sum_{i=1}^{m} d_i(x_c, y_c, r)^2 \]
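The geometric cost is easy to evaluate for any candidate circle. The sketch below assumes the residual is the point-to-center distance minus the radius, as in the `Residual` helper shown later in the article; the names `geom_residuals` and `geom_cost` are illustrative and not part of the original code:

```matlab
% Data set used throughout the article.
P = [1 7; 2 6; 5 8; 7 7; 9 5; 3 7];

% Signed radial residuals for a candidate circle (xc, yc, r):
% distance of every point from the center minus the radius.
geom_residuals = @(xc, yc, r) sqrt((xc - P(:,1)).^2 + (yc - P(:,2)).^2) - r;

% Geometric cost: sum of squared residuals.
geom_cost = @(xc, yc, r) sum(geom_residuals(xc, yc, r).^2);

% Cost of the circle obtained from the algebraic fit
% (values quoted in the Implementation section).
cost_alg = geom_cost(5.3794, 7.2532, 3.0370);
```

Evaluating this function for the algebraic result gives a baseline that any geometric fit should improve upon.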

This time the task is well defined in the geometric sense, but we face a different difficulty. The resulting least squares problem is non-linear, so there is no straightforward analytical solution. Instead we have to use an iterative approach. In this case we can take advantage of the Gauss-Newton algorithm and, at the same time, explain it in more detail.

Gauss-Newton algorithm

The algorithm is a modification of Newton's method in optimization, tailored to minimizing a sum of squares, just like the one we came upon in the circle fit problem. One advantage over Newton's method is that it does not require the second partial derivatives of the objective function (the Hessian). The first derivatives of the residuals (the Jacobian) are sufficient.
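The relation between the two methods can be made explicit. A brief sketch, assuming the objective $S(\boldsymbol{p}) = \sum_i d_i(\boldsymbol{p})^2$ with parameter vector $\boldsymbol{p}$, residual vector $\boldsymbol{d}$ and Jacobian $J$ of the residuals (symbols chosen here for illustration):

```latex
\begin{align*}
\nabla S &= 2\, J^T \boldsymbol{d} \\
\nabla^2 S &= 2\, J^T J + 2 \sum_{i=1}^{m} d_i \,\nabla^2 d_i \;\approx\; 2\, J^T J \\
\Delta\boldsymbol{p} &= -\left(\nabla^2 S\right)^{-1} \nabla S
  \;\approx\; -\left(J^T J\right)^{-1} J^T \boldsymbol{d}
\end{align*}
```

Dropping the term with the second derivatives of the residuals is what turns the Newton step into the Gauss-Newton step. The approximation works well when the residuals are small or nearly linear in the parameters.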

In a data fitting problem the components of the minimized sum are the squared residuals, where a residual is the difference between a data point and the value predicted by the model. In our case these are precisely the differences defined above. They describe the deviation between the modeled radius of the fitted circle and the actual distance between a data point and the circle center.

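The Gauss-Newton algorithm approximates the solution step by step, updating the sought model parameters in each iteration according to the rule:

\[ \boldsymbol{p}^{(k+1)} = \boldsymbol{p}^{(k)} - J^{-1}\,\boldsymbol{d}\left(\boldsymbol{p}^{(k)}\right) \]

where $\boldsymbol{p} = (x_c, y_c, r)^T$ is the vector of model parameters, $\boldsymbol{d}$ is the vector of residuals and $J$ is its Jacobian, both evaluated at $\boldsymbol{p}^{(k)}$.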

In the case of an overdetermined system, where there are more data points than model parameters, the Jacobian matrix is rectangular and thus not invertible. Therefore its pseudoinverse is used instead:

\[ J^{+} = \left(J^T J\right)^{-1} J^T \]
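In Matlab the pseudoinverse rarely needs to be formed explicitly. The sketch below uses a made-up full-rank system (`J` and `R` are hypothetical values, not taken from the circle data) to illustrate that the normal equations, `pinv` and the backslash operator yield the same least squares step:

```matlab
% Hypothetical overdetermined system: 6 equations, 3 unknowns, full column rank.
J = [1 0 -1; 0 1 -1; 1 1 -1; -1 0 -1; 0 -1 -1; 2 1 -1];
R = [0.5; -0.2; 0.1; 0.3; -0.4; 0.2];

step_normal = (J' * J) \ (J' * R);   % explicit normal equations
step_pinv   = pinv(J) * R;           % Moore-Penrose pseudoinverse
step_bslash = J \ R;                 % backslash returns the least squares solution
```

The backslash form is usually preferred because it avoids forming `J' * J`, which squares the condition number of the problem.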

In order to find the Jacobian we need to calculate the partial derivatives of all residuals with respect to all model parameters ($x_c$, $y_c$, $r$):

\[ J(x_{c}, y_{c}, r) = \left[ \begin{array}{ccc} \frac{\partial d_1(x_{c}, y_{c}, r)}{\partial x_{c}} & \frac{\partial d_1(x_{c}, y_{c}, r)}{\partial y_{c}} & \frac{\partial d_1(x_{c}, y_{c}, r)}{\partial r} \\ \vdots & \vdots & \vdots \\ \frac{\partial d_m(x_{c}, y_{c}, r)}{\partial x_{c}} & \frac{\partial d_m(x_{c}, y_{c}, r)}{\partial y_{c}} & \frac{\partial d_m(x_{c}, y_{c}, r)}{\partial r} \end{array} \right] \]

For our residuals $d_i = \sqrt{(x_c - x_i)^2 + (y_c - y_i)^2} - r$ it takes the form:

\[ J(x_{c}, y_{c}, r) = \left[ \begin{array}{ccc} \frac{x_{c} - x_{1}}{\sqrt{(x_{c} - x_{1})^2 + (y_{c} - y_{1})^2}} & \frac{y_{c} - y_{1}}{\sqrt{(x_{c} - x_{1})^2 + (y_{c} - y_{1})^2}} & -1 \\ \vdots & \vdots & \vdots \\ \frac{x_{c} - x_{m}}{\sqrt{(x_{c} - x_{m})^2 + (y_{c} - y_{m})^2}} & \frac{y_{c} - y_{m}}{\sqrt{(x_{c} - x_{m})^2 + (y_{c} - y_{m})^2}} & -1 \end{array} \right] \]
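An analytic Jacobian is easy to get wrong, so it can be worth comparing it against finite differences before using it. A minimal sketch, assuming the residual is the point-to-center distance minus the radius; the anonymous functions `resid` and `jac`, the test point `p0` and the step `h` are illustrative choices:

```matlab
P = [1 7; 2 6; 5 8; 7 7; 9 5; 3 7];   % data set from the article

% Residuals and analytic Jacobian as functions of p = [xc; yc; r].
dist  = @(p) sqrt((p(1) - P(:,1)).^2 + (p(2) - P(:,2)).^2);
resid = @(p) dist(p) - p(3);
jac   = @(p) [(p(1) - P(:,1)) ./ dist(p), ...
              (p(2) - P(:,2)) ./ dist(p), ...
              -ones(size(P, 1), 1)];

p0 = [5; 3; 4];      % arbitrary test point that is not one of the data points
h  = 1e-6;           % finite-difference step
J_fd = zeros(size(P, 1), 3);
for k = 1:3
    e = zeros(3, 1);
    e(k) = h;
    J_fd(:, k) = (resid(p0 + e) - resid(p0 - e)) / (2*h);   % central difference
end

max_err = max(max(abs(J_fd - jac(p0))));   % should be close to zero
```

Note that the first two columns are undefined when the center coincides with a data point, because the distance in the denominator is then zero.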

Now we are ready to implement and test this solution.

Implementation

To start the calculations we have to define initial values for the center and radius of the circle. For this purpose we can use the results of the algebraic fit from the first example and store them as a vector.

xc = 5.3794;

yc = 7.2532;

r = 3.0370;

res = [xc; yc; r]; % initial guess taken from the algebraic fit

The iterative procedure of the Gauss-Newton algorithm can be implemented as follows:

max_iters = 20;

max_dif = 1e-6; % max relative difference between consecutive results

converged = false;

for i = 1 : max_iters

J = Jacobian(res(1), res(2), P);

R = Residual(res(1), res(2), res(3), P);

prev = res;

res = res - J\R; % Gauss-Newton step, J\R solves the least squares problem

dif = abs((prev - res)./res);

if all(dif < max_dif)

converged = true;

fprintf('Convergence met after %d iterations\n', i);

break;

end

end

if ~converged

fprintf('Convergence not reached after %d iterations\n', max_iters);

end

First we define two exit conditions: the loop stops either when the result barely changes between two consecutive iterations or when the maximum number of iterations has been reached before convergence. Next, we use helper functions to calculate the Jacobian and the residual vector. After that, we find the new approximation of the result and check the exit conditions.

The Jacobian and Residual helper functions are implemented below:

function J = Jacobian(xc, yc, P)

% distance of every data point from the current center

denom = sqrt((xc - P(:,1)).^2 + (yc - P(:,2)).^2);

J = [(xc - P(:,1))./denom, (yc - P(:,2))./denom, -ones(size(P, 1), 1)];

end

function R = Residual(xc, yc, r, P)

% difference between the point-to-center distance and the radius

R = sqrt((xc - P(:,1)).^2 + (yc - P(:,2)).^2) - r;

end

The final result, reached after 11 iterations, is shown in the following figure.

This time the outcome agrees with common sense, because the data points are located along an arc of a circle. This result, however, does not come without extra cost. The disadvantage of non-linear least squares optimization is that it finds, at best, a local minimum. The final result therefore depends on the choice of the starting point, and a poor choice may prevent convergence altogether. Let's see if there is anything we can do about it.

Linearized geometric approach

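Previously we defined the squares of residuals as

\[ d_i^2 = \left( \|\boldsymbol{c} - \boldsymbol{x}_i\| - r \right)^2 \]

Following the second referenced paper, we can instead compare the squared distance with the squared radius and define the residual as

\[ d_i = \|\boldsymbol{c} - \boldsymbol{x}_i\|^2 - r^2 \]

After expansion of the first term and rearrangement we get:

\[ d_i = \boldsymbol{x}_i^T\boldsymbol{x}_i - 2\boldsymbol{c}^T\boldsymbol{x}_i + \boldsymbol{c}^T\boldsymbol{c} - r^2 \]

Now, remembering that we seek the center of the circle $\boldsymbol{c}$ and its radius $r$, we can rearrange the equation further:

\[ d_i = \boldsymbol{x}_i^T\boldsymbol{x}_i - \left( 2\boldsymbol{c}^T\boldsymbol{x}_i + r^2 - \boldsymbol{c}^T\boldsymbol{c} \right) \]

\[ d_i = \boldsymbol{x}_i^T\boldsymbol{x}_i - \left[\begin{array}{cc} \boldsymbol{x}_i^T & 1 \end{array}\right] \left[\begin{array}{c} 2\boldsymbol{c} \\ r^2 - \boldsymbol{c}^T\boldsymbol{c} \end{array}\right] \]

And make a clever substitution of variables:

\[ \boldsymbol{z} = \left[\begin{array}{c}2x_c\\ 2y_c\\ r^2 - \boldsymbol{c}^T \boldsymbol{c}\end{array}\right] \]

Finally, we get the residual defined as:

\[ d_i = \boldsymbol{x}_i^T\boldsymbol{x}_i - \left[\begin{array}{cc} \boldsymbol{x}_i^T & 1 \end{array}\right] \boldsymbol{z} \]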

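which is linear with respect to the variable $\boldsymbol{z}$. Now, the sum of squares of the residuals

\[ \sum_{i=1}^{m} d_i^2 = \| \boldsymbol{q} - A\boldsymbol{z} \|^2 \]

can be minimized directly, which is equivalent to solving a linear system

\[ A\boldsymbol{z} = \boldsymbol{q} \]

in the least squares sense, where

\[ A = \left[\begin{array}{ccc} x_1 & y_1 & 1 \\ \vdots & \vdots & \vdots \\ x_m & y_m & 1 \end{array}\right], \qquad \boldsymbol{q} = \left[\begin{array}{c} x_1^2 + y_1^2 \\ \vdots \\ x_m^2 + y_m^2 \end{array}\right] \]

It has a unique solution if the three columns of the matrix $A$ are linearly independent, which is the case whenever the data points do not all lie on a single straight line.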
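The uniqueness condition can be checked numerically before solving. A minimal sketch, assuming the system matrix is `[P, ones(n, 1)]` as in the Matlab code of this section; the helper name `has_unique_fit` and the collinear test set `P_bad` are made up for illustration:

```matlab
P_ok  = [1 7; 2 6; 5 8; 7 7; 9 5; 3 7];   % data set from the article
P_bad = [0 0; 1 1; 2 2; 3 3];             % hypothetical collinear points

% The linear system has a unique least squares solution only if the
% matrix [x, y, 1] has full column rank, i.e. rank 3.
has_unique_fit = @(P) rank([P, ones(size(P, 1), 1)]) == 3;

ok  = has_unique_fit(P_ok);    % points not on one line
bad = has_unique_fit(P_bad);   % points on the line y = x
```

For collinear points the columns of the matrix are linearly dependent, which reflects the fact that no single circle is determined by points on a straight line.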

One final step is to restore the original variables, so that:

\[ x_c = \frac{z_1}{2}, \qquad y_c = \frac{z_2}{2}, \qquad r = \sqrt{z_3 + x_c^2 + y_c^2} \]

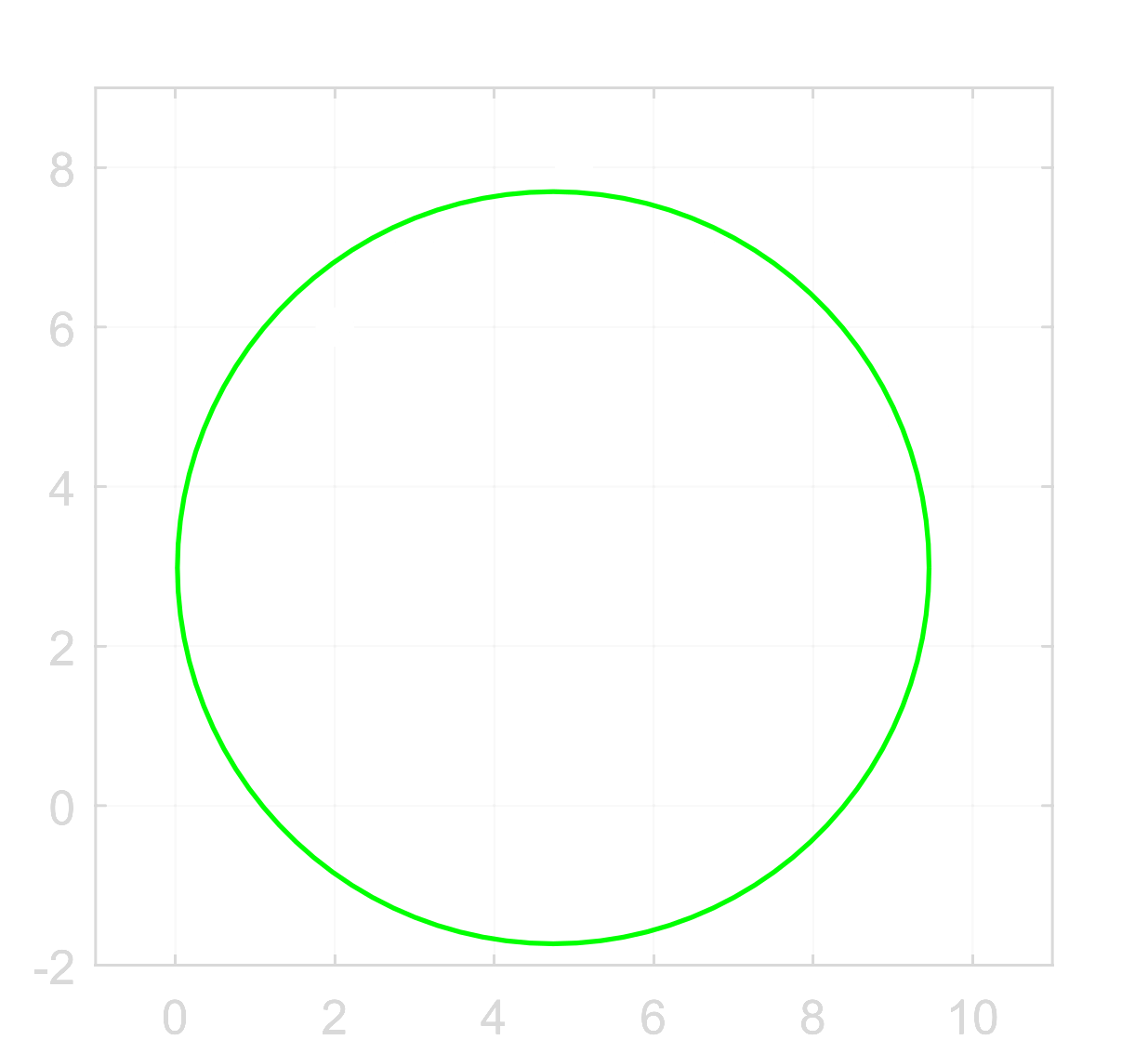

Now is the time to see how the linearized geometric approach works in practice. Here is the Matlab code:

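[n, m] = size(P); % n data points in m = 2 dimensions

y = [P, ones(n, 1)]\sum(P.*P, 2); % least squares solution for z

x = 0.5*y(1:m); % circle center

r = sqrt(y(m+1) + x'*x); % circle radius

Below you can see the plot of the fitted circle: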

Conclusions

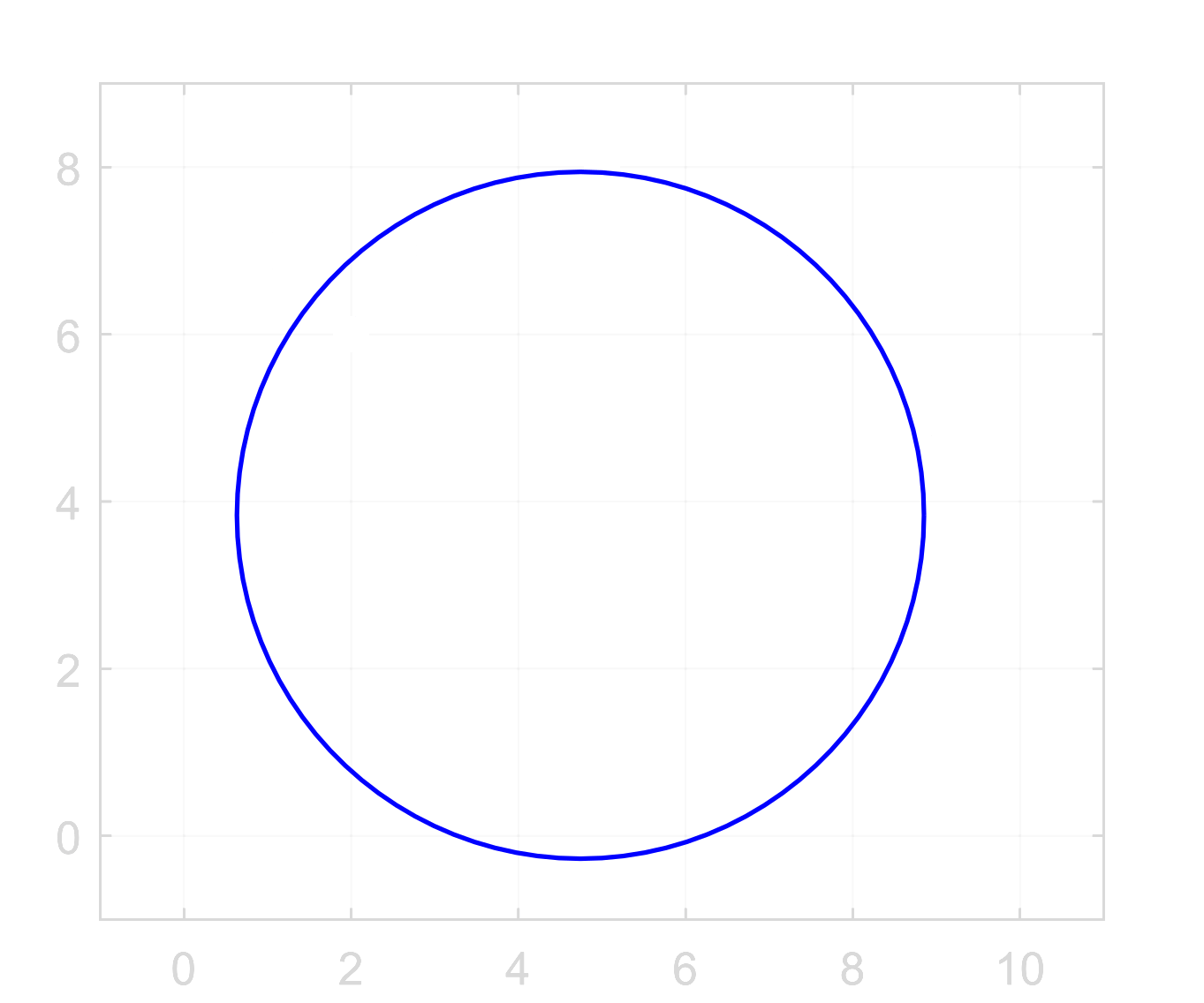

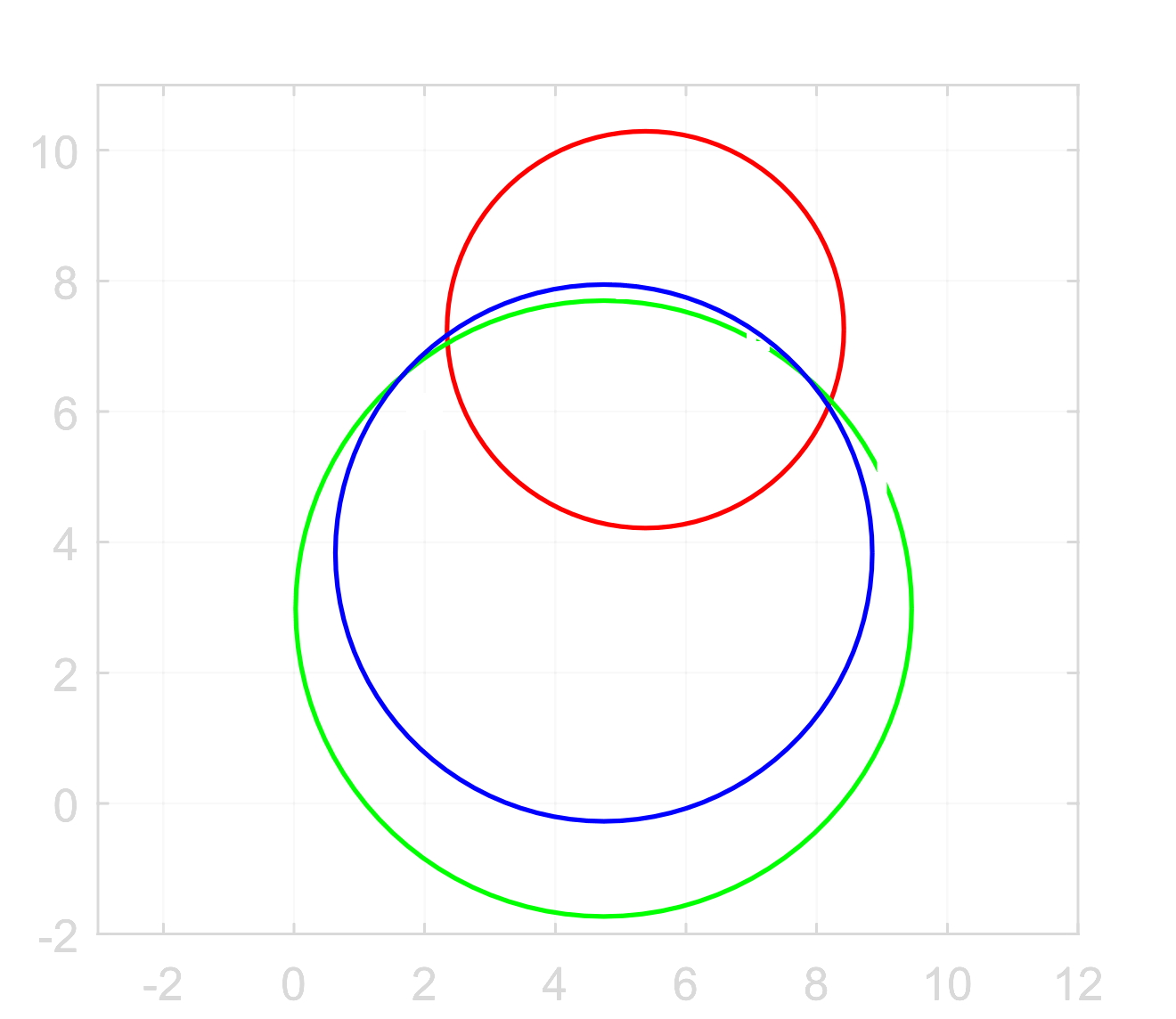

We discussed three different approaches to least squares circle fit. Each of them presents its own advantages and shortcomings. Their combined results are shown in the figure below. It is clearly visible that they may differ greatly. In a real world scenario however, the number of data points would be significantly larger which would most likely diminish the scale of differences. Nevertheless it is worth knowing they persist.

The algebraic method provides a simple solution, yet it does not define the cost function in a geometric context, so its result can be unexpected. It is usually used to provide the initial approximation for an iterative algorithm.

The geometric approach formulates the problem correctly in the geometric sense but yields a non-linear least squares problem, which is much harder to solve. It requires an iterative solver, which is numerically more expensive, sensitive to the choice of the starting point and not guaranteed to converge. However, given a good initial guess it provides the solution that best matches geometric intuition.

The linearized approach overcomes the shortcomings of the geometric one at the cost of skewing the result by changing the cost function.

The conclusion to remember is that a least squares fit is not a single method but a family of optimization methods, which take different approaches to formulating the problem at hand and use different mathematical means to solve it. It is always important to realize what task we want to solve and to select the right tool for it.